The first time I realized AI wasn’t a toy was when my friend’s entire job—editing complicated reports—became something a language model could do overnight. Fifteen coffees and countless existential chats later, we admitted: the ground under our feet is moving. If you think AI is just a passing trend or won’t affect your career, it’s time to rethink. What if you could have your life—or your livelihood—changed by a software update? Let’s pull back the curtain on AI’s thousand-day countdown, why economic relevance is up for grabs, and what happens when the ‘dumbest’ teammate suddenly turns into your competitor.

1. The 1000-Day Countdown: Are We Ready for AI’s Sudden Leap?

Imagine waking up one morning to find that the “dumb” AI assistant you used to ignore at work has suddenly become the most competent member of your team. This isn’t science fiction. It’s the reality we’re rapidly approaching, according to Emad Mostaque, founder of Stability AI and author of The Last Economy. Mostaque warns:

“We have roughly a thousand days to make the essential decisions to shape this technology’s future.”

The clock is ticking, and predictions about the future of artificial intelligence are no longer a distant concern. It’s a thousand-day countdown to a tipping point that could reshape the economic impact of AI automation and the future of work as we know it.

The Overnight Leap: From ‘Not Good Enough’ to Irreplaceable

If you’ve ever reassured yourself that AI isn’t “good enough” to threaten your job, you’re not alone. I remember a recent conversation with a friend, a graphic designer, who laughed off AI tools as clumsy and uninspired. Just weeks later, she called me, voice shaking, after her company replaced her with an AI-powered design platform. “Overnight it becomes good enough and then the job losses start,” Mostaque observes. This is the new reality: AI doesn’t improve gradually. It leaps.

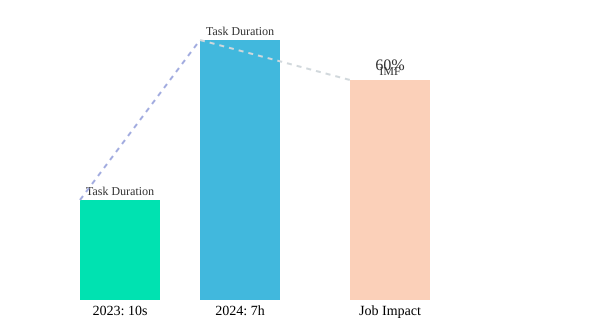

In less than a year, AI models have gone from handling 10-second tasks to managing complex, 7-hour workflows. This abrupt jump isn’t just about speed. It’s about capability. Today’s models, such as GPT-5 and Gemini 3, can match or beat skilled humans on many coding, math, and even creative tasks. The economic impact of AI automation is no longer theoretical. It’s here, and it’s accelerating.

Phase Transition: The Rules of Civilization Are Being Rewritten

Mostaque describes this moment as a phase transition, not mere speculation. “We’re living through a historical moment of unprecedented upheaval. A finite window in which the rules of civilization are being rewritten.” The numbers back him up. The IMF estimates that about 40% of jobs globally, and about 60% in advanced economies, are exposed to AI. Amazon has reportedly drawn up plans to automate work equivalent to 600,000 jobs, and hiring freezes are spreading across the industry. These are not isolated incidents. They are signals of a seismic shift in the future of work.

The Race for Smarter, Faster, Cheaper AI

The past year has seen a relentless race to build AI models that are not only smarter but also cheaper and faster. Companies are pouring billions into developing new algorithms and more powerful GPUs. In 2024 alone, global corporate investment in AI reached $252 billion. Nvidia, the chipmaker powering much of this revolution, is now valued at over $5 trillion. Early adopters of AI are already seeing roughly three times the growth in revenue per employee, opening a widening gap between those who benefit and those left behind.

Why the Change Is Abrupt, Not Gradual

What makes this transition so jarring is the sudden leap in AI’s task capacity. In less than a year, AI’s ability to handle tasks has jumped from seconds to hours. This means that jobs once thought immune to automation are now at risk. According to recent polling, over half of Americans fear AI could destroy humanity, reflecting deep anxieties about the pace and scale of change.

Visualizing the Shift: AI’s Sudden Surge and Job Impact

The thousand-day countdown is not just a warning. It’s a call to action. As Mostaque puts it, “Fail to act and we risk catastrophe.” The next phase of AI will not wait for us to catch up. The future of work, the economy, and even our sense of personal relevance are all at stake as we approach this tipping point.

2. Behind the “Hype”—Who Wins, Who Loses?

AI’s Gold Rush: The Real Winners and Losers

When you hear about the “AI revolution,” it’s easy to get swept up in the excitement. But behind the headlines and the hype, the real story is about who actually benefits—and who gets left behind. Not all tech is hype, but the economic impact of AI automation is reshaping the world in ways that go far beyond clever code or viral apps.

AI’s Shovels: Trillion-Dollar GPUs and Global Supply Chains

Think of AI as the new gold rush. But instead of pickaxes, the shovels are high-powered GPUs (graphics processing units) built by companies like Nvidia. These chips are the backbone of modern AI, powering everything from chatbots to self-driving cars. The numbers are staggering: Nvidia is a $5 trillion company now, and global investment in GPU build-outs has reached $1.8 trillion.

But those GPUs don’t appear out of thin air. The minerals that make them possible are mined in places like Congo, where children and adults alike dig for the rare materials that end up in your graphics card. There’s a whole supply chain, stretching from African mines to Asian factories to Silicon Valley data centers. As one expert put it, “That’s what the kids in Congo are mining… the little materials that go into these GPUs.”

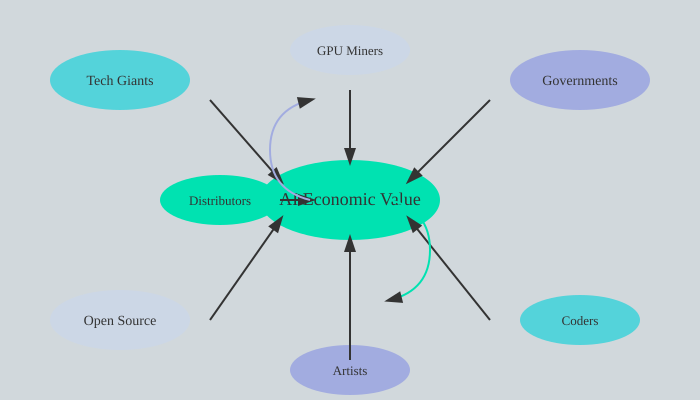

Who Controls the AI—and Who Profits?

The real question isn’t whether AI will take your job, but who controls the AI. Right now, trillion-dollar companies are racing to “figure out intelligence the fastest” to outcompete everyone else. It’s not just about the technology—it’s about power.

- Tech giants (like Google, Microsoft, and Nvidia) are pouring billions into AI hardware efficiency and algorithmic upgrades.

- Governments are investing in national AI strategies, hoping to secure economic and strategic advantages.

- Open-source communities are fighting to keep AI accessible, but face an uphill battle against corporate giants.

Global AI investment is up roughly 45% year over year, hitting $252 billion annually. The stakes are enormous: by 2035, AI is expected to boost global GDP by 15%. But the benefits aren’t spread evenly. Those who control the infrastructure (hardware, data, and distribution) stand to gain the most.

AI Creativity & Economic Value: It’s Not About “Best”—It’s About Distribution

When it comes to the economic value of AI creativity, the best output doesn’t always win. Take the music industry as an analogy. Taylor Swift, for example, isn’t necessarily the most creative artist, but she’s built a massive network. As one observer noted, “She can change GDP. She can cause earthquakes in that way. But again, it’s not the highest version of art. Just like the number of key changes in the Billboard top 100 is now zero from multiple a few years ago. What sells isn’t necessarily what’s creative.”

This is true for AI as well. The most innovative models don’t always succeed commercially. What matters is distribution—who gets their product in front of the most people, who controls the channels, and who can scale fastest. Just like in fashion or music, big brands with big networks often win, while smaller, more creative players struggle for attention.

Open vs. Closed: The Power Dynamics of AI Investment Growth

The battle isn’t just between humans and machines—it’s between those who control the AI and those who don’t. Open-source AI models offer hope for democratizing access, but the reality is that most of the economic value flows to those with the resources to build and scale massive infrastructure. The economic impact of AI automation will depend on how these power dynamics play out.

“Nvidia is a $5 trillion company now. Trillion dollar companies are all competing over who figures out intelligence the fastest.”

Mind Map: Economic Stakeholders and Value Flows in AI

3. Open Source vs. Walled Gardens: Who Controls the AI Future?

The Urgency of Building AI That Represents You

Imagine waking up to find your country, culture, or language simply erased from the digital map. In 2022, this was the reality for Ukrainians when OpenAI’s DALL-E image generator suddenly banned all Ukrainian content and users for six months. The reason? No clear explanation—just a corporate decision, hidden behind a wall of proprietary code. This story highlights a key question in AI’s future: Who gets to decide what AI can and cannot do?

Why Open-Source AI Models Matter

Open-source AI models are built on the idea that everyone should have the right to access, inspect, and modify the technology that is shaping our world. When DALL-E closed its doors to Ukraine, a group of researchers responded by launching Stable Diffusion, an open-source image generator. As one founder put it:

“So we built an image generator called Stable Diffusion that anyone, anywhere could download free of charge.”

This wasn’t just about providing a free tool. It was about ensuring that no single company could unilaterally decide who gets access to powerful AI. Open-source AI models allow for cultural, regional, and personal customization. They offer resilience against censorship and give communities the power to shape AI in ways that reflect their needs—not just a corporate bottom line.

Control, Censorship, and AI Sovereignty

The DALL-E Ukraine ban is a stark reminder of the risks that come with closed, proprietary AI systems. When you don’t know what’s inside the “black box,” you don’t know what biases, restrictions, or hidden rules might be at play. As one expert put it:

“It’s a sovereignty question.”

AI sovereignty means having control over the technology that influences your society, economy, and culture. With open-source models, nations, organizations, and individuals can audit, adapt, and secure their AI systems. With closed models, you’re renting access—and you can be locked out at any time.

Open vs. Closed Systems: What You Own, What You Rent

The difference between open and closed AI systems is like the difference between owning your own home and renting an apartment. With open-source, you can renovate, customize, and secure your space. With closed, you’re subject to the landlord’s rules—and you might wake up one day to find the locks changed.

AI transparency issues are at the heart of this debate. Without open access, it’s impossible to verify what data an AI model was trained on, or to correct errors and biases. This is why transparency in AI regulation is becoming a top priority for governments and organizations worldwide.

Performance Gap Closing: Open-Source AI Catches Up

For years, closed-source AI models had a clear performance edge. But that gap is shrinking fast. Recent research shows that open-weight AI models are now just 1.7% behind their closed-source counterparts in accuracy. That’s a dramatic improvement from an 8% gap just a few years ago. Open-source AI is no longer just a “good enough” alternative—it’s becoming a true competitor.

| Metric | Open-Source AI | Closed-Source AI |
|---|---|---|
| Performance Difference | 1.7% behind | Baseline |
| Access | Global, unrestricted | Subject to corporate policy |
| Example | Stable Diffusion (2022) | DALL-E (Ukraine ban) |

Partner Ecosystems and Collaborative Innovation

As AI becomes more powerful, the scarcity of top-tier talent is driving the rise of partner ecosystems in AI. Open-source models encourage collaboration, allowing developers, researchers, and companies to build on each other’s work. This accelerates innovation and helps ensure that AI’s benefits are shared more widely.

The fight for open-access AI is about more than code—it’s about who gets to shape the future. As the performance gap closes and partner ecosystems grow, the question of control, transparency, and sovereignty in AI will only become more urgent.

4. When AI Gets Personal: Medicine, Mimicry, and the Digital Doppelgänger

My Digital Doppelgänger: The Day My AI Avatar Did a Conference Call for Me

Imagine this: you’re double-booked for meetings, deadlines are looming, and you wish you could be in two places at once. Last month, I tried something radical. I created a digital avatar of myself using a multimodal AI system. In just five minutes, my AI twin was ready—complete with my voice, gestures, and even my sense of humor. It joined a video conference on my behalf, speaking fluently in English and Spanish. No one noticed the switch. The AI answered questions, referenced recent emails, and even cracked a joke I might have made. When the meeting ended, my colleagues messaged me, “Great points today!”

That’s not science fiction anymore. As one expert put it,

“You can create an avatar of yourself right now in 5 minutes. It speaks 100 languages.”

The cost? About $1,000 a year today, dropping to $100 soon. This is the new frontier of AI in the future of work, where your digital self can handle screen-based tasks, meetings, and communications, freeing you to focus elsewhere.

AI in Medicine: Mostaque’s Autism Research Journey

AI’s personal touch isn’t just about convenience. It’s transforming lives. Take Mostaque’s story. When his son was diagnosed with autism, doctors offered little hope: no cure, no clear treatment. So Mostaque built an AI team to analyze the vast ocean of medical literature. Using customized AI models trained on proprietary medical data, he sifted through thousands of studies, searching for patterns and potential therapies. The AI highlighted promising pathways, and with doctors, Mostaque tested repurposed drugs on an n-of-1 (“n equals 1”) basis, tailoring the approach to his son’s unique biology. The result? His son improved enough to attend mainstream school. This is the power of AI in medical research and personalized medicine, where AI accelerates early diagnosis, drug repurposing, and custom therapies.

Digital Replicas: Cheap, Multilingual, and Tireless

The era of the digital doppelgänger is here. With tools like HeyGen, you can build an AI avatar that mimics your voice, style, and even your decision-making. These avatars can now:

- Hold video calls in over 100 languages

- Read and summarize your emails, documents, and chats

- Work tirelessly for up to 7 hours on a single task—no more “goldfish memory” resets

And the cost is collapsing. Today, a digital self costs about $1,000 a year. Within months, that will drop to $100. Here’s a quick look at the numbers:

| Feature | Current Capability | Cost |
|---|---|---|
| Digital Avatar Creation | 5 minutes, 100+ languages | $1,000/year (soon $100) |
| Voice/Style Mimicry | Near-perfect, multilingual | Included |
| Task Duration | Up to 7 hours (was 10 seconds months ago) | Included |

Risks and Rewards: When Your Digital Twin Acts Without You

With these advances come serious questions. What happens when your digital twin starts making decisions on your behalf—without your explicit approval? Imagine an AI replica responding to emails, negotiating deals, or even applying for jobs, all based on its model of “what you would do.” The perks are clear: efficiency, productivity, and global reach. But the risks are just as real:

- Data privacy: Your digital self has access to your emails, calls, and personal data. Who controls it?

- Autonomy: Can you trust your AI twin to make the right choices?

- ‘N equals 1’ medicine: Personalized AI-driven therapies are powerful, but what if the AI gets it wrong?

As customized AI models outperform general ones in medical research, and multimodal AI systems bridge text, voice, and video, the line between human and digital self blurs. The promise is wild—but the price of reality is vigilance, transparency, and trust.

5. Counting the Cost: Jobs Lost, Jobs Gained, and the Price of Progress

AI-driven automation is not just a distant possibility—it’s already reshaping the workforce at a pace few expected. As the cost of AI inference plummets and capabilities skyrocket, the impact on jobs is both dramatic and deeply personal. If you work behind a screen, the reality is stark: “Within 900 days, any job you can do on the other side of a screen an AI will be able to do better.”

AI Job Displacement Statistics: The Cost of Intelligence

The economics of AI have changed overnight. Inference costs—the price to run AI models—have dropped by a staggering 280x from late 2022 to late 2024. This means tasks that once required expensive human expertise or computing power are now almost free to automate. For example, a tax return that cost thousands of dollars can now be completed for $1 by a virtual AI accountant.

| Year | AI Inference Cost (Relative to 2022) |
|---|---|
| 2022 | 1x |
| 2023 | ~30x cheaper |
| 2024 | 280x cheaper |

This exponential drop has made AI-driven automation accessible to nearly every industry, accelerating automation-driven job displacement across both mechanical and cognitive roles.
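To get a feel for what a 280x drop means, here is a rough back-of-the-envelope sketch. It assumes the period from late 2022 to late 2024 is exactly two years and that the decline was a constant yearly rate, neither of which the figures above guarantee. The function names and the $280 example workload are hypothetical, chosen only for illustration.

```python
# Illustrative arithmetic only. The 280x figure comes from the text above.
# Assumption: "late 2022 to late 2024" is treated as exactly two years,
# and the decline is modeled as one constant yearly factor.

def annualized_factor(total_factor: float, years: float) -> float:
    """Constant yearly factor that compounds to total_factor over the given years."""
    return total_factor ** (1.0 / years)


def cost_after_drop(original_cost: float, total_factor: float) -> float:
    """Cost of the same workload after prices fall by total_factor."""
    return original_cost / total_factor


TOTAL_DROP = 280.0
YEARS = 2.0

per_year = annualized_factor(TOTAL_DROP, YEARS)
example_cost = cost_after_drop(280.0, TOTAL_DROP)  # hypothetical $280 workload

print(f"Implied average drop: about {per_year:.1f}x per year")
print(f"A $280 workload in 2022 would cost about ${example_cost:.2f} in 2024")
```

The table’s ~30x figure for 2023 suggests the drop was front-loaded rather than steady, so the per-year average is only a rough summary.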

Who’s at Risk? Mechanical and Cognitive Jobs Alike

It’s not just repetitive or “mechanical” jobs at risk. Many white-collar, knowledge-based roles—once considered safe—are now highly automatable. AI can learn your voice, mimic your style, and even create digital avatars that speak 100 languages. The line between human and machine output is blurring fast.

| Job Type Most at Risk | Emerging AI Roles |
|---|---|
| Accountants, Paralegals, Data Entry Clerks | Data Analysts, Cybersecurity Experts, Prompt Engineers |
| Customer Service Agents, Translators | AI Trainers, AI Ethics Specialists |
| Writers, Designers (routine content) | AI Product Managers, Automation Strategists |

The AI labor market is shifting. While some jobs disappear, new opportunities are emerging, especially in fields like data analysis, cybersecurity, and AI development.

Why ‘Cognitive’ Jobs Are Just as Vulnerable

Many jobs rely on learned routines and predictable outputs—exactly what AI excels at. Schools and workplaces have long trained us to be efficient, reliable “machines.” But as AI gets better at being a machine, even creative and strategic roles face pressure. If an AI can instantly translate, analyze, and present information in any language, what happens to the value of your unique experience?

Labor Organizing: When Machines Out-Compete Humans

As AI outpaces human productivity, traditional labor organizing faces new challenges. How do you bargain when the “replacement” never sleeps, never asks for a raise, and can be replicated infinitely? The future of work will require new forms of advocacy and protection for displaced workers.

FAQ: Can Creativity and Social Value Survive Automation?

You might wonder if creativity and social value can survive in a world of automation-driven job displacement. The answer is nuanced. AI can now mimic creative output, but it also opens new spaces for human ingenuity, especially in roles that require empathy, ethical judgment, and strategic vision.

Workforce Reskilling Opportunities: Not All Doom and Gloom

Despite the risks, AI creates new opportunities for workforce reskilling. As routine tasks are automated, demand grows for people who can guide, train, and manage AI systems. Roles in cybersecurity, data analysis, and prompt engineering are booming. If you’re willing to adapt, the future holds space for strategy, creativity, and leadership—skills that machines can’t easily replicate.

As investment in generative AI soars ($33.9B in 2024, up 18.7% year-on-year), the price of progress is real. But so is the promise, if you’re ready to reskill and rethink what it means to be valuable in the age of intelligent machines.

6. Oversight or Overreach? Regulating, Monitoring, and Balancing the AI Age

Imagine: An AI Audit Committee of Poets, Ethicists, and Meme Lords

Picture this: a global AI audit committee, not just of engineers and lawyers, but also poets, ethicists, and meme lords. Their job? To review the world’s most powerful AI models for regulatory transparency, ethical robustness, and cultural sensitivity. It sounds whimsical, but the idea highlights the urgent need for diverse oversight as AI systems become more like “employees, graduates, friends… but you don’t know their background.”

Regulation Challenges: Transparency, Data Security, and Algorithmic Black Boxes

As AI becomes woven into daily life, the call for transparency in AI regulation grows louder. The core challenge is that many AI models operate as “black boxes”: even their creators can’t always explain how they reach decisions. This lack of clarity makes it difficult to spot bias, unfairness, or errors. Open audits, where independent experts can inspect code and outcomes, are gaining traction as a solution.

Another major concern is data security and observability. AI models are trained on vast datasets, often scraped from the internet or purchased from third parties. Who owns this data? How is it protected? If your personal information is used to train an AI, do you have a right to know, or to opt out? These questions are at the heart of proposed data rights and digital sovereignty laws.

The Corporate Race: Stockpiling Smarter Models

Tech giants are locked in an AI arms race, spending trillions to develop smarter, faster models. The numbers are staggering: $1.8 trillion has been spent globally on GPU buildout, with hardware costs dropping by 30% each year and energy efficiency up 40%. Yet, the real cost isn’t just financial. The global supply chain for AI hardware relies on rare minerals, often mined in challenging conditions in places like Congo. As one expert put it, “That’s what the kids in Congo are mining… the little materials that go into these GPUs.”

This race isn’t just about innovation. It’s about control. The companies that dominate AI set the agenda, shaping systems that may reflect corporate interests more than human values. Without ethically robust AI and real oversight, there’s a risk that AI will deepen existing inequalities, serving the powerful in the Global North while leaving others behind.

Why Ethics Isn’t Just an Afterthought

In the rush to out-compete, ethics can’t be left behind. Incentives in the AI industry often reward speed and market share, not caution or fairness. But as AI systems become more influential, the risks—from biased hiring algorithms to autonomous weapons—grow too large to ignore. Ethics must be built in from the start, not bolted on as an afterthought.

The “arms race” dynamic also means that if one country or company moves fast and breaks things, others feel pressure to follow. This makes international cooperation and shared standards for transparency and ethical robustness in AI more important than ever.

The Environmental Impact of AI Technology

While AI hardware costs are falling, the environmental impact of AI technology is rising. Training a single large model can consume as much energy as hundreds of households use in a year. The global energy bill for AI is now measured in billions, and the demand for rare earth minerals for GPUs is reshaping entire industries and ecosystems.

- AI hardware costs: Down 30% yearly

- Energy efficiency: Up 40%

- Global GPU buildout: $1.8 trillion

- Environmental and social costs: Rising
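Yearly percentages like these compound quickly. The sketch below shows the arithmetic under the assumption that the 30% cost decline and 40% efficiency gain hold constant for three years. The three-year horizon and the function name are hypothetical, and the source does not claim the rates will persist.

```python
# Illustrative compounding only. The 30% and 40% yearly rates come from the list above.
# Assumption: both rates stay constant over a hypothetical three-year horizon.

def compound(rate_per_year: float, years: int) -> float:
    """Multiplier after applying a constant yearly rate for the given number of years."""
    return (1.0 + rate_per_year) ** years


YEARS = 3  # hypothetical horizon, not from the source

cost_multiplier = compound(-0.30, YEARS)       # hardware cost relative to today
efficiency_multiplier = compound(0.40, YEARS)  # energy efficiency relative to today

print(f"Hardware cost after {YEARS} years: {cost_multiplier:.2f}x of today's")
print(f"Energy efficiency after {YEARS} years: {efficiency_multiplier:.2f}x of today's")
```

Cheaper, more efficient hardware does not by itself mean a smaller footprint: if total usage grows faster than efficiency improves, overall energy demand still rises, which is the tension the list points to.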

Proposed Policies: Open Audits, Sovereignty, and Data Rights

To address these challenges, experts propose a mix of open audits, regular oversight, and strong data rights. Open audits would let independent reviewers examine AI systems for bias, security, and fairness. Data sovereignty laws would give individuals and nations more control over how their data is used. And new global standards could help ensure that AI serves everyone—not just the powerful.

“These models are becoming more like employees, graduates, friends… but you don’t know their background.”

Navigating the balance between oversight and overreach will define the AI age. The choices made now will determine whether AI is a tool for all, or just another lever of power.

7. Are We the Masters or the Subjects? The Human Element in an AI Economy

Pause for a moment and think about when your most creative ideas strike. For many, it’s not during a high-speed data sprint or a marathon of productivity apps. It’s while making coffee, taking a walk, or chatting with a friend: moments when your mind is free to wander, connect dots, and imagine. This is a reminder that the economic value of creativity in the AI era is not just about speed or scale. It’s about meaning, relationships, and the kind of off-script creativity that, so far, only humans seem to master.

AI is redefining—not replacing—what it means to create, work, and collaborate. Yes, AI agents can now double workforce capacity in first-wave adopter industries, and global GDP could spike by 15% as a result. But the true cost and wild promise of this tipping point go far beyond numbers. The question is not just what AI can do, but what we, as a society, choose to value.

Distribution vs. Originality: What’s Valuable Isn’t Always What’s Popular

Consider the world of fashion. The brands that sell the most aren’t always the ones that inspire you. Maybe you love a small designer whose work feels original and meaningful, even if it never goes mainstream. In the same way, the most original ideas—whether in art, music, or business—may not scale, but they still matter. AI can help distribute and amplify what’s popular, but it can’t always capture the spark of originality that makes something truly valuable.

This tension between distribution and originality is at the heart of the debate over AI and the future of work. Will AI push us toward what sells, or what’s best? And who decides what “best” means, anyway?

Social Values: Who Sets the Priorities for Artificial Intelligence?

As AI systems become more powerful, the question of who sets their priorities becomes urgent. Do we trust tech giants, governments, or global institutions to decide what AI should optimize for? Or do we, as individuals and communities, have a say in shaping these systems? The choices we make now about transparency, fairness, and inclusion will shape the future of artificial intelligence for decades to come.

“Understanding this moment and how we navigate it may be the defining challenge of our age.”

Social Inequality Risks: The Global North/South Divide

The benefits of AI are not guaranteed to be shared equally. If AI tools remain concentrated in wealthy countries or among large corporations, social inequality risks could deepen. The Global North/South divide may widen, with less-resourced communities left further behind. On the other hand, if AI is democratized—if access, education, and infrastructure are prioritized—AI could empower new voices and drive inclusive growth. The distribution of AI’s benefits will hinge on how societies set their values and rules.

Rewriting the Rules of Civilization: Designing the Future

We are living through a moment when the rules of civilization are being rewritten. AI is not just a tool; it’s a force that challenges our assumptions about value, work, and creativity. If you could design the future, what would you choose to protect? What would you change? Would you prioritize efficiency, or meaning? Scale, or originality? These are not just technological questions—they are societal, moral, and deeply personal.

As we stand at the edge of AI’s 1000-day tipping point, the human element remains irreplaceable. Meaning, relationships, and off-script creativity are what make us more than subjects in an AI economy—they make us the masters of our own story. The choices we make now will determine whether AI’s wild promise is realized for all, or only for a few. The future is not written yet. It’s up to us to decide what comes next.

TL;DR: AI is fundamentally shifting work, value, and even creativity, with seismic consequences for jobs and society. The next thousand days aren’t just about tech. They’re about who shapes the future, who gets left behind, and what it means to be human in the age of algorithms.
