Once, I accidentally convinced an AI to recommend pizza toppings for my pet turtle—don’t ask. That little mishap taught me a larger lesson: clear, thoughtful instructions (aka prompt engineering) matter more in the world of AI than most people realize. Whether you want a chatbot to write your next song or you’re leading a digital transformation at scale, your words are the keys to unlocking the tech’s full potential. Today, let’s explore prompt engineering’s practical wonders, industry challenges, occasionally wild outcomes, and how you can ride this AI wave, even if you’re brand new. (Don’t worry, no turtles were harmed in the making of this post.)

The Human Art of Prompt Engineering: Awkward Beginnings, Big Payoff

Personal Anecdote: Ordering Pizza via an AI Gone Wrong

Let’s start with a story. Imagine you’re hungry and decide to order pizza using an AI chatbot. You type, “Order me a pizza.” The AI responds: “Sure! Here’s a recipe for homemade pizza.” Not quite what you had in mind. Frustrated, you try again: “Order a large pepperoni pizza for delivery to my house.” This time, the AI asks, “What is your address?” Progress! But then it sends you a list of local pizza shops instead of placing the order. This comedy of errors is a classic example of why prompt engineering fundamentals matter: the way you phrase your request can lead to wildly different (and sometimes hilarious) results.

Prompt Engineering: The Bridge Between Humans and AI

Prompt engineering is more than just typing questions into a box. It’s the art and science of communicating with AI in a way that gets you the results you want. Think of it as building a bridge between your human intentions and the machine’s logic. The better your prompt writing, the stronger that bridge becomes. As Makrand, who has more than 19 years of experience, puts it:

“Writing the effective prompt is very very important.”

Why Prompt Clarity Makes or Breaks AI Responses

Let’s break down a simple example. If you ask an AI, “What is a good gift for a friend?” you’ll likely get a generic list: mugs, books, maybe a plant. But if you specify, “Suggest a ₹2,000 gift for a friend who just bought a home and enjoys cooking,” you’ll get thoughtful, relevant suggestions. The difference? Clarity and specificity. When your prompt is clear, the AI can deliver exactly what you need.

| Makrand’s Experience | Generic Prompt | Specific Prompt |
|---|---|---|
| 19+ years | What is a good gift for a friend? | Suggest a ₹2,000 gift for a friend who just bought a home and enjoys cooking. |
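To make the difference concrete, here is a minimal Python sketch that assembles the specific prompt from the details the generic one leaves out. The helper `build_gift_prompt` and its parameters are hypothetical, invented for illustration; no AI model is called.

```python
def build_gift_prompt(budget_inr: int, recipient: str, situation: str, interest: str) -> str:
    """Assemble a specific gift request from its parts (hypothetical helper)."""
    # Each argument supplies one detail that the generic prompt leaves out.
    return (
        f"Suggest a ₹{budget_inr:,} gift for a {recipient} "
        f"who {situation} and enjoys {interest}."
    )


generic = "What is a good gift for a friend?"
specific = build_gift_prompt(2000, "friend", "just bought a home", "cooking")
print(generic)
print(specific)
```

Filling in every parameter forces you to decide on budget, occasion, and interests before you hit send, which is the kind of clarity the table illustrates.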

The Comedy of Errors: Not All Prompts (or Outcomes) Are Created Equal

Vague prompts often lead to unpredictable (and sometimes entertaining) AI outcomes. Ask for “a good movie,” and you might get a list of classics you’ve already seen. Ask for “a light-hearted comedy from the last five years that’s suitable for family movie night,” and you’ll get something much closer to what you want. These mishaps aren’t just frustrating; they’re reminders that sound prompt engineering principles are essential for effective AI communication.

Embracing the Awkward Beginnings

Everyone starts somewhere, and prompt engineering is no different. The first few attempts might feel awkward or even silly. You might get answers that make you laugh or scratch your head. But with each try, you learn how to be more precise, clear, and even a bit playful. Remember, prompt engineering isn’t just technical—it’s about clear communication and empathy for both the machine and the human on the other end.

Setting Realistic Expectations: What Prompt Engineering Can (and Can’t) Solve

While a well-crafted prompt can unlock powerful results, it’s important to know the limits. AI can’t read your mind or fill in missing details. If your prompt is vague, the response will be too. But when you provide structure, context, and even a touch of humor, you’ll find the AI becomes a much more helpful partner. The payoff for mastering this art? Faster, more accurate, and more enjoyable interactions with AI—awkward beginnings and all.

Under the Hood: What Makes Large Language Models Tick (Without the Jargon)

Let’s peek behind the curtain of Generative AI Systems and see what really makes Large Language Models (LLMs) and their AI cousins so powerful. Don’t worry—no computer science degree required. We’ll use a simple analogy and a dash of fairytale magic to make it crystal clear.

Learning Like a Child: From ABCs to Sentences

Think back to when you first learned to read and write. You started with the ABCs, then moved on to simple words, and before long, you were finishing sentences like, “Once upon a…” (You probably just thought, “time!”) That’s essentially how LLMs, a type of foundational model, learn. They’re trained on massive amounts of text (millions of books, articles, and websites) so they can spot patterns and predict what comes next in a sentence, just like you do when you hear “The cat sat on the…” (Did you say “mat”? You’re a natural!)
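Real LLMs use neural networks trained on tokens, not simple word counts, but the core idea of “predict what comes next” can be illustrated with a toy bigram model. The sketch below is that simplification, not how any production model works: it counts which word follows which in a tiny, made-up corpus and picks the most frequent follower.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training sentences."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], prompt: str) -> Optional[str]:
    """Return the word seen most often after the prompt's last word."""
    words = prompt.lower().split()
    if not words or words[-1] not in follows:
        return None  # the toy model has never seen this word, so it cannot guess
    return follows[words[-1]].most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the bird sat on the fence",
]
model = train_bigrams(corpus)
print(predict_next(model, "The cat sat on the"))  # mat
```

“mat” wins because it follows “the” twice in this corpus, while every other candidate appears only once. An LLM does something far richer, weighing the whole context rather than just the last word, but its output is still a prediction learned from training text.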

What Are Foundational Models?

Foundational models are the engines powering modern Generative AI Systems. As one expert puts it:

“The core of it is foundational model and the foundational model is a pre-trained model.”

These models are trained in advance (pre-trained) on huge datasets so they can handle a wide range of tasks. Here are some of the most well-known foundational models:

| Model Name | Type | Main Use |
|---|---|---|
| Amazon Titan | LLM | Text generation, summarization |
| Meta Llama | LLM | Text-based tasks |
| Claude 4 | LLM | Conversational AI |
| Gemini 1.5 Pro | LLM | Advanced text understanding |
| Claude Sonnet | LLM | Text and code generation |

Meet the AI Family: LLMs, VLMs, Diffusion, and More

Foundational models come in different flavors, each with its own specialty:

- LLMs (Large Language Models): Masters of text. They read, write, summarize, and answer questions using only words.

- VLMs (Vision Language Models): These models understand both images and text. Show them a photo, and they’ll describe it for you.

- Diffusion Models (like DALL·E): These creative types turn your words into images—think “draw a dragon reading a book.”

- Audio Models: They handle sound—transcribing speech, generating music, or even recognizing voices.

- Code Models: These are trained to write and understand computer code.

Prompt Engineering: Your Magic Wand

Here’s where you come in. The quality of your prompt—the instructions you give—shapes the output. Just like asking, “Once upon a time…” gets you a story, a well-crafted prompt helps LLMs and other Generative AI Systems give you better, more relevant results. This is called prompt engineering, and it’s the secret sauce for getting the most out of AI.

Why Training Data and Fine-Tuning Matter

Foundational models are only as good as the data they’re trained on. The more diverse and accurate the data, the smarter the model. Fine-tuning is like giving the model extra lessons—so it can specialize in legal advice, medical info, or even your favorite fairytales.

Wild Card: Try Your Own Fairytale Prompt!

Want to see the magic in action? Try prompts like:

- Once upon a time, in a land far away, there lived a…

- The cat sat on the…

- He looked up at the sky and saw…

Watch how the AI fills in the blanks—just like you did as a kid!

Prompt Anatomy 101: What Every AI Whisperer Needs to Know

Effective prompt writing is both an art and a science. To communicate well with AI, you need to understand the essential building blocks of a well-structured prompt. Every prompt you craft should include five core components: persona (role), instruction, examples, desired format, and extra context. Let’s break down each element and see how they work together.

1. Persona: Setting the Stage with Role

Start by telling the AI what role to play. This is called the persona. Are you asking for help as a developer, a teacher, or a casual user? The persona signals the AI to emulate a specific perspective or expertise. As one expert put it:

“So my prompt should go and include the text...what role you are playing...some kind of a role.”

For example, a developer might write, “As a senior Python developer, explain how to optimize this code.” In contrast, a casual user might say, “Hey, can you help me understand this code?” The difference in persona leads to very different responses.

2. Instruction: Clarifying the Desired Action

Next, specify what you want the AI to do. This is your instruction. Be clear and direct, often starting with action verbs like create, explain, summarize, or list. The more explicit your instruction, the more accurate the response.

3. Examples: Anchoring the Output

Providing examples or sample data helps anchor the AI’s output. If you want a certain style, structure, or type of answer, show it! For instance, if you’re asking for a summary, you might include a sample summary. This guides the AI to match your expectations.

4. Format: Ensuring Useful Responses

Tell the AI how you want the answer delivered. Should it be a list, a table, a paragraph, or code? Specifying the format ensures the output is easy to use and fits your needs.

5. Extra Context: Constraints and Style

Finally, add any extra info that matters—like length, style, safety, or constraints. For example, you might say, “Keep the answer under 100 words,” or “Use a friendly tone.” These details help fine-tune the response.
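The five components map naturally onto a small data structure. The Python sketch below shows one possible way to keep them separate and render them into a single prompt string; the `Prompt` class, its field names, and section labels such as “Format:” are our own assumptions, not a format any model requires.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Prompt:
    """One field per component: persona, instruction, examples, format, extra context."""
    persona: str
    instruction: str
    examples: List[str] = field(default_factory=list)
    output_format: str = ""
    extra_context: str = ""

    def render(self) -> str:
        """Join the non-empty components into one prompt, separated by blank lines."""
        parts = [f"You are {self.persona}.", self.instruction]
        if self.examples:
            parts.append("Examples:\n" + "\n".join(f"- {e}" for e in self.examples))
        if self.output_format:
            parts.append(f"Format: {self.output_format}")
        if self.extra_context:
            parts.append(f"Constraints: {self.extra_context}")
        return "\n\n".join(parts)


prompt = Prompt(
    persona="a senior Python developer",
    instruction="Explain how to optimize this code.",
    examples=["for i in range(len(items)): total += items[i]"],
    output_format="a numbered list",
    extra_context="Keep the answer under 100 words and use a friendly tone.",
)
print(prompt.render())
```

Optional fields left empty are simply skipped, so the same class covers both a detailed developer prompt and a quick casual one.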

Quick Breakdown: Developer Persona vs. Casual User Prompts

| Prompt Component | Developer Persona | Casual User |
|---|---|---|
| Persona | “As a backend engineer...” | “Hey, can you...” |
| Instruction | “Optimize this SQL query.” | “Make this run faster?” |
| Examples | Sample query provided | No example or vague context |
| Format | “Return as code block.” | “Just tell me how.” |
| Extra Context | “Limit to 3 lines.” | “Explain simply.” |

Dangers of Omitting Context or Role

If you skip the persona or context, the AI may default to generic answers or misunderstand your needs. Missing instructions or examples can lead to vague, off-target responses. Always include these elements for effective prompt writing.
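One quick way to catch these omissions is to check a draft prompt before sending it. This minimal sketch assumes you keep the five components in a plain dictionary under the hypothetical keys shown; it only tests whether each component is present, not whether it is any good.

```python
from typing import Dict, List

# Hypothetical keys, one per component described in this section.
COMPONENTS = ("persona", "instruction", "examples", "format", "context")


def missing_components(draft: Dict[str, str]) -> List[str]:
    """List the components that are absent or blank in a draft prompt."""
    return [name for name in COMPONENTS if not draft.get(name, "").strip()]


casual_draft = {
    "instruction": "Make this run faster?",
    "format": "Just tell me how.",
    "context": "Explain simply.",
}
print(missing_components(casual_draft))  # ['persona', 'examples']
```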

Tangential Tip: Emojis, Tone, and Detailed Instructions

Don’t underestimate the power of tone, emojis, or extra details. Sometimes, asking for a “cheerful summary 😊” or specifying “formal tone, bullet points” can dramatically influence results.

The ‘Extra Info’ Factor

- Constraints: Word count, forbidden topics, etc.

- Style: Formal, casual, humorous, etc.

- Safety: Avoid sensitive content, add disclaimers.
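Stating a constraint in the prompt does not guarantee the model will honor it, so it can be worth checking the response afterwards. The sketch below covers the two items easiest to test mechanically, a word limit and a list of forbidden topics; the function name and the simple keyword matching are illustrative assumptions, and real safety review needs far more than keyword search.

```python
import re
from typing import List


def check_constraints(response: str, max_words: int, forbidden: List[str]) -> List[str]:
    """Return human-readable constraint violations; an empty list means none were found."""
    problems = []
    word_count = len(response.split())
    if word_count > max_words:
        problems.append(f"too long: {word_count} words (limit {max_words})")
    lowered = response.lower()
    for term in forbidden:
        # Whole-word keyword match: a crude stand-in for a real safety filter.
        if re.search(rf"\b{re.escape(term.lower())}\b", lowered):
            problems.append(f"mentions forbidden topic: {term}")
    return problems


print(check_constraints("Here is a cheerful summary.", max_words=100, forbidden=["politics"]))  # []
```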

Remember: Structured prompts with clear roles, instructions, examples, and format yield the best AI responses. Master these prompt components to optimize every interaction.

Zero-shot, Few-shot, and Chain-of-Thought Prompting: The Secret Menu

Welcome to the “secret menu” of Prompt Engineering Techniques. If you want to master AI communication, you need to know when to use Zero-shot Prompting, Few-shot Prompting, and Chain-of-Thought Prompting. Each style has its own flavor, strengths, and best use cases. Let’s break them down, compare them, and see how they can boost your results—and your AI’s reliability.

Zero-shot Prompting: The Fastest Route

Think of Zero-shot Prompting as giving the AI a task with no examples—just instructions. It’s like telling a kid, “Go dance!” without showing them any moves. Sometimes you get a masterpiece, sometimes you get a wild freestyle. This method is the quickest and most flexible, but it can be less precise. It’s best for straightforward tasks where you trust the AI to know what you mean.

Zero-shot prompting...the AI is given the task, no examples.

Example:

Translate this sentence to French: "I love learning new things."

Few-shot Prompting: Show, Don’t Just Tell

With Few-shot Prompting, you provide 1-3 examples within your prompt. This is like showing a kid a few dance moves before asking them to perform. The AI picks up on the pattern and mimics your style or logic. This approach is more accurate and helps the AI avoid common mistakes, especially for tasks with specific formats or styles.

Few-shot prompting...providing examples within the prompt to guide responses.

Example:

English: "Good morning." → French: "Bonjour."

English: "How are you?" → French: "Comment ça va?"

English: "I love learning new things." → French:
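Few-shot prompts are easy to generate from a list of example pairs. This Python sketch reproduces the translation prompt above; the function name is hypothetical, and the “English: … → French:” layout simply mirrors the example rather than any required syntax.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Put each worked example on its own line, then leave the last answer blank."""
    lines = [f'English: "{en}" → French: "{fr}"' for en, fr in examples]
    lines.append(f'English: "{query}" → French:')
    return "\n".join(lines)


pairs = [("Good morning.", "Bonjour."), ("How are you?", "Comment ça va?")]
print(few_shot_prompt(pairs, "I love learning new things."))
```

Passing an empty list of examples leaves only the final line, which turns the same function into a zero-shot prompt.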

Chain-of-Thought Prompting: Show Your Work

Chain-of-Thought Prompting takes things further by asking the AI to explain its reasoning step by step. Instead of just giving the answer, the AI “thinks out loud.” This is perfect for complex problems, multi-step reasoning, or when you want to reduce the risk of AI hallucinations (those weird, made-up answers). It’s like asking a student to show their work, not just write the final answer.

Chain-of-thought prompting...ask AI to explain its reasoning, not just give the answer.

Example:

Question: If you have 3 apples and buy 2 more, how many apples do you have?

Let's think step by step.

First, you start with 3 apples. Then you buy 2 more apples. 3 + 2 = 5. So, you have 5 apples.
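In code, chain-of-thought prompting usually comes down to two small steps: append a reasoning cue to the question, then pull the final answer out of the model’s longer reply. The sketch below hard-codes the reply from the example above instead of calling a model, and its “last number wins” extraction is a simple heuristic that assumes the answer is stated last.

```python
import re
from typing import Optional

COT_CUE = "Let's think step by step."


def chain_of_thought_prompt(question: str) -> str:
    """Append the reasoning cue so the model shows its work before answering."""
    return f"Question: {question}\n{COT_CUE}"


def extract_final_number(reasoning: str) -> Optional[int]:
    """Treat the last integer in the reply as the final answer (a simple heuristic)."""
    numbers = re.findall(r"-?\d+", reasoning)
    return int(numbers[-1]) if numbers else None


print(chain_of_thought_prompt("If you have 3 apples and buy 2 more, how many apples do you have?"))

# Hard-coded reply copied from the example above, standing in for a model response.
reply = ("First, you start with 3 apples. Then you buy 2 more apples. "
         "3 + 2 = 5. So, you have 5 apples.")
print(extract_final_number(reply))  # 5
```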

Prompting Styles at a Glance

| Prompting Style | How It Works | Best For | Strengths | Limitations |
|---|---|---|---|---|
| Zero-shot | No examples, just instructions | Simple, familiar tasks | Fast, flexible | Less precise, more errors |
| Few-shot | 1-3 examples included | Patterned, formatted tasks | More accurate, context-aware | Needs good examples, longer prompts |
| Chain-of-Thought | Step-by-step reasoning | Complex reasoning, math, logic | Reduces errors, transparent logic | Slower, longer outputs |

Hands-On: One Task, Three Styles

- Zero-shot: “Summarize this article.”

- Few-shot: “Here are two summaries. Now summarize this article.”

- Chain-of-Thought: “Summarize this article. Explain your main points step by step.”
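To compare the three styles fairly, it helps to generate them from the same article. This sketch builds all three summarization prompts side by side; the function name, the placeholder article text, and the way sample summaries are labeled are assumptions for illustration, and you would send each string to the model of your choice.

```python
from typing import Dict, List


def summary_prompts(article: str, samples: List[str]) -> Dict[str, str]:
    """Build zero-shot, few-shot, and chain-of-thought versions of one task."""
    shown = "\n".join(f"Summary {i}: {s}" for i, s in enumerate(samples, start=1))
    return {
        "zero-shot": f"Summarize this article.\n\n{article}",
        "few-shot": f"Here are {len(samples)} summaries.\n{shown}\n\n"
                    f"Now summarize this article.\n\n{article}",
        "chain-of-thought": "Summarize this article. Explain your main points "
                            f"step by step.\n\n{article}",
    }


prompts = summary_prompts(
    "ARTICLE TEXT GOES HERE",
    ["Short recap of a cloud migration case study.", "Key points from a webinar on LLM safety."],
)
for style, text in prompts.items():
    print(f"--- {style} ---\n{text}\n")
```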

Quiz Call-back

Which style would you use for a tough question? Drop your answer in the chat—or better yet, try all three and see which delivers the best results for your task!

Prompt Engineering in Real Life: Webinars, Certifications, and Community Learning

Mastering prompt engineering isn’t just about reading guides or watching a few videos; real growth happens when you join communities, attend live sessions, and put your skills to the test. At the heart of this movement is Synergetics Digital Transformation, which brings together AWS webinars on prompt engineering, hands-on certifications, and vibrant Emerging Technology Meetup groups across India. Here’s how these resources can supercharge your AI communication journey (and maybe even spark a few lifelong friendships along the way).

Synergetics Digital Transformation: Learning That Sticks

Synergetics is on a mission:

‘We empower businesses and professionals to thrive in today’s fast evolving tech landscape.’

Through a blend of webinars, certifications, and community events, they make sure you’re not just learning the latest in AI and cloud, but actually applying it. Their AWS prompt engineering webinar series is curated for everyone, from absolute beginners to seasoned IT pros.

- Beginners: Dive into AWS basics, understand core concepts, and explore career roles.

- IT Professionals & Developers: Get hands-on with cloud architecture, migrations, and advanced tools like prompt engineering and machine learning.

- Data & AI Specialists: Master analytics, data strategies, and AI essentials to stay ahead in a data-driven world.

- Decision Makers: Learn about cloud investments and generative AI use cases to make informed choices.

Emerging Technology Meetup: Community Learning in Action

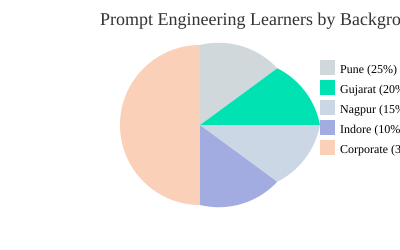

Learning is better together. That’s why Synergetics supports Emerging Technology Meetup communities in Pune, Gujarat, Nagpur, and Indore. Anyone eager to learn about tech can join via the Meetup app—no fees, just curiosity required. These meetups are more than just lectures; they’re a space to discuss, debate, and connect with fellow AI enthusiasts. Community and ongoing education accelerate prompt engineering mastery—and can spark lifelong connections.

Why Webinars, Quizzes, and Live Sessions Matter

Let’s face it: people learn best by doing, not just reading. Synergetics’ webinars are interactive, featuring live Q&A, real-world scenarios, and quizzes that reinforce your understanding. These sessions are designed to give you a taste of the full certification experience, making learning both practical and memorable.

Certification: The Next Step

Ready to go deeper? Synergetics offers in-depth, four-day certification courses—at a special discounted rate for webinar attendees. These programs go beyond the basics, preparing you for real-world challenges in AI and cloud. Plus, earning a certification can add strategic value to your resume and help future-proof your skills.

Corporate Training: Trusted by Industry Leaders

Synergetics has trained professionals from some of the world’s top companies, including:

- TCS

- Accenture

- JP Morgan

- Capgemini

- Siemens

- L&T Infotech

Whether you’re an individual learner or part of a corporate team, you’re in good company.

Special Offers: Unlocking Value for Learners

By participating in webinars and community events, you unlock exclusive discounts on certification programs. It’s Synergetics’ way of rewarding your commitment to learning—and making advanced training accessible to everyone.

Wild Card: Prompt Engineering Improv, Anyone?

Imagine if the best prompt writers formed their own improv comedy troupe—riffing on AI scenarios, inventing hilarious chatbot personas, and turning every session into a show. Who says learning can’t be fun?


Wrangling AI Hallucinations: From Model Myths to Safer Outputs

Let’s get real: sometimes, AI just makes things up. This is called AI model hallucination. Imagine asking your clever friend for directions, and instead of admitting they don’t know, they confidently invent a shortcut through a non-existent park. That’s what happens when an AI model “hallucinates”: it fills in gaps with its best guess, which can be wildly inaccurate.

What Is AI Hallucination?

In plain English, AI hallucination means the model gives you an answer that sounds right but isn’t true. It’s not lying on purpose; it’s just using whatever knowledge it has. Think of when you first learned the alphabet and tried to spell new words: sometimes you got them wrong. The AI’s “memory” works a bit like that. If the question is unclear or too complex, the model might overreach and create information that doesn’t exist.

Why Do Vague or Hostile Prompts Make Hallucinations Worse?

Think of prompts as instructions. If you give the AI a vague or confusing prompt, it’s like asking your friend, “How do I get there?” without saying where “there” is. Or, if you’re rude or aggressive, your friend might rush and give you a careless answer. Similarly, AI models are more likely to hallucinate when prompts are ambiguous, multi-layered, or lack context.

Common Causes of AI Model Hallucination and Prompt-Based Fixes

| Cause | How It Happens | Prompt Engineering Fix |
|---|---|---|
| Vague Prompts | Unclear or broad instructions | Add specific details and context |
| Complex Multi-Step Questions | Too many tasks at once | Break into smaller, single-step prompts |
| Model Overconfidence | AI “fills in” missing info | Request sources or ask for reasoning |
| Weak Constraints | No limits or boundaries set | Set clear rules or output formats |
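Several of these fixes can be baked into a reusable wrapper. The sketch below is one assumed way to do it: it adds context, keeps a single task per prompt, asks for sources and reasoning, allows an explicit “I don’t know,” and pins the output format. The function name and the wording of each instruction are our own, and no wording can guarantee a hallucination-free answer.

```python
def harden_prompt(task: str, context: str, output_format: str) -> str:
    """Wrap one task with the fixes from the table above (illustrative wording)."""
    return "\n".join([
        f"Context: {context}",                     # fixes vague prompts
        f"Task (do only this one thing): {task}",  # avoids multi-step overload
        "Cite a source for each factual claim and briefly explain your reasoning.",
        'If you are unsure, answer "I don\'t know" instead of guessing.',
        f"Output format: {output_format}",         # sets clear constraints
    ])


print(harden_prompt(
    task="Summarize the main causes of AI hallucination.",
    context="I am writing a beginner-friendly blog post.",
    output_format="three bullet points",
))
```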

Why Guardrails Matter: Safety Filters and Verification Steps

Just as you wouldn’t trust your over-imaginative friend without double-checking, you shouldn’t accept every AI answer at face value. AI Model Safety features—like safety filters, fact-checking, or requiring the model to show its reasoning—act as guardrails. They help catch hallucinations before they cause trouble. As the saying goes:

'Prompt engineering helps mitigate AI model hallucination risks by improving prompt clarity and incorporating safety guardrails.'

Real-World Analogy: The Over-Confident Friend

Imagine asking your friend for directions in a city they barely know. Instead of admitting uncertainty, they confidently invent a route. That’s how AI can behave—well-meaning, but sometimes wrong. The best way to get safer outputs? Be clear, specific, and ask for evidence.

Checklist: Spot-Check Questions to Catch AI Hallucinations

- Does the answer include sources or references?

- Is the response specific, or does it sound generic?

- Can you verify the facts with a quick search?

- Did you ask the AI to explain its reasoning?

- Are there any details that seem out of place or unlikely?
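Two of these checks, the first and the fourth, can be roughly automated. The heuristic below only looks for signs of sources and visible reasoning; the function name and the patterns it searches for are assumptions, and passing it says nothing about whether the facts are true, so the manual checks above still apply.

```python
import re
from typing import List


def spot_check(response: str) -> List[str]:
    """Flag missing sources or missing reasoning (a rough heuristic, not a fact check)."""
    warnings = []
    # A URL, a numbered citation like [1], or the word "source(s)" counts as a reference.
    if not re.search(r"https?://|\[\d+\]|\bsources?\b", response, re.IGNORECASE):
        warnings.append("no sources or references")
    # Common reasoning markers suggest the model explained itself.
    if not re.search(r"\b(because|therefore|step)\b", response, re.IGNORECASE):
        warnings.append("no visible reasoning")
    return warnings


print(spot_check("Paris is the capital of France."))
# ['no sources or references', 'no visible reasoning']
```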

Remember, prompt engineering best practices mean adding context, setting constraints, and demanding reasoning. These steps help you wrangle AI hallucinations and get closer to the truth every time you prompt.

2025 and Beyond: The Roadmap for Prompt Engineering (and Random Anecdotes for the Future)

As you step into the world of prompt engineering in 2025 and beyond, you’re entering a space where art, science, and a dash of unpredictability collide. The Prompt Engineering Roadmap 2025 is not just about writing better prompts—it’s about mastering the evolving language of AI, keeping up with emerging trends, and embracing new creative frontiers. Let’s explore where prompt engineering is headed, what’s changing, and how you can ride the next wave of generative AI prompting techniques.

Mixing Art, Science, and Unpredictability

Prompt engineering is both an art and a science, involving iterative testing, refinement, and data-driven analysis to align AI outputs with user intent. In 2025, this balance is more important than ever. You’ll need to blend creativity with technical know-how, using structured approaches while leaving room for experimentation. Sometimes, the best prompts come from a spark of inspiration—and sometimes, from a spreadsheet of test results.
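Here is a minimal sketch of that data-driven loop: score each prompt variant against a few test cases and keep the winner. To stay self-contained it uses `fake_model`, a hypothetical stand-in you would replace with a real model call; the substring-match scoring is also a simplifying assumption, since real evaluations often need richer metrics.

```python
from typing import Callable, List, Tuple


def score_prompt(template: str, cases: List[Tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Fraction of test cases whose expected text appears in the model's reply."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = 0
    for user_input, expected in cases:
        reply = model(template.format(input=user_input))
        if expected.lower() in reply.lower():
            hits += 1
    return hits / len(cases)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: it only translates when asked for French."""
    translations = {"good morning": "Bonjour", "thank you": "Merci"}
    text = prompt.lower()
    if "french" not in text:
        return "Sure! Here is some information about that phrase."
    for english, french in translations.items():
        if english in text:
            return french
    return "Je ne sais pas."


cases = [("Good morning", "Bonjour"), ("Thank you", "Merci")]
vague = "Help me with: {input}"
specific = "Translate this phrase to French: {input}"
for name, template in [("vague", vague), ("specific", specific)]:
    print(name, score_prompt(template, cases, fake_model))
# vague 0.0
# specific 1.0
```

Swapping `fake_model` for a real client turns this into a small regression test you can rerun every time you tweak a prompt.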

Emerging Trends: Tighter Standards and Creative Use Cases

- Tighter Prompt Standards: As AI becomes more integrated into business and daily life, expect stronger industry standards for prompt design. This means clearer guidelines, ethical considerations, and more robust safety checks to ensure reliable and responsible AI outputs.

- Better Evaluation Tools: New validation and evaluation tools are emerging, allowing you to test prompt reliability, measure output quality, and reduce bias. These tools will help you iterate faster and with greater confidence.

- More Creative Use Cases: From AI-powered storytelling to business automation, the range of applications for prompt engineering is expanding. You might find yourself designing prompts for virtual tutors, creative writing assistants, or even AI-driven event hosts.

Chart: Growth of Prompt-Based Certifications and Training (2020–2025)

| Year | Certifications | Training Sessions | Community Events |
|---|---|---|---|
| 2020 | 10+ | 25+ | 5+ |
| 2022 | 30+ | 80+ | 15+ |
| 2025 (est.) | 100+ | 250+ | 50+ |

Source: Industry estimates and Synergetics research, reflecting the rapid rise in AI prompt-based education offerings.

Random Anecdote: Would You Trust an AI with Your Best Man Speech?

Here’s a thought—would you trust an AI to write your next best man speech? Maybe not today, but what about in five years? With the leaps in generative AI prompting techniques, you might soon find yourself relying on AI for everything from heartfelt toasts to business proposals. The unpredictability is part of the excitement—and the challenge—of prompt engineering’s future.

Practical Predictions and Advice for Aspiring Prompt Engineers

- Stay Curious: The field is evolving fast. Keep learning, experimenting, and sharing your discoveries with the community.

- Embrace Validation Tools: Use the latest evaluation platforms to refine your prompts and ensure consistent, high-quality results.

- Think Creatively: Don’t just solve problems—imagine new possibilities. The next big use case for generative AI might come from your wildest idea.

- Get Certified: With the growth in prompt-based certifications and training, formal credentials can set you apart in a crowded field.


The Prompt Engineering Roadmap 2025 is wide open—so get ready to lead, learn, and maybe even let an AI write your next speech.

FAQ: Everything You Wish You’d Asked About Prompt Engineering—But Didn’t

Welcome to the ultimate FAQ on prompt engineering fundamentals, where we answer the questions you were too shy (or too busy) to ask. Whether you’re new to AI communication or looking to optimize your prompt-writing skills, this guide is here to demystify the essentials—and sprinkle in a little personality along the way.

What is prompt engineering in simple terms?

Prompt engineering is the art and science of crafting clear, effective instructions for AI models, whether you reach them through ChatGPT or a cloud service such as Amazon Bedrock. Think of it as giving your AI a recipe: the more precise and detailed your instructions, the better the outcome.

Do I need to know how to code to start with prompt engineering?

Nope! While coding helps if you want to automate or scale your prompts, anyone can start experimenting with prompt optimization using plain language. It’s accessible, fun, and increasingly essential for modern AI work.

What’s the fastest way to get better results from ChatGPT or other LLMs?

Be specific! Include context, define the persona (like “as a data analyst”), give examples, and specify your desired output format. Iteration is key—tweak your prompts and see how the model responds.

How do Zero-shot and Few-shot prompting differ—and when should I use which?

Zero-shot prompting means you ask the AI to perform a task without examples. Few-shot prompting includes a few examples to guide the model. Use zero-shot for simple or common tasks; use few-shot when you want the AI to mimic a certain style or handle nuanced tasks.

Can prompt engineering prevent all AI hallucinations?

Not entirely. While clear prompts reduce errors, AI models can still “hallucinate” (make things up). Always ask for sources, double-check facts, and prompt for self-review to minimize risks.

What’s the weirdest thing an AI’s ever ‘hallucinated’ for you?

From inventing historical events to describing imaginary animals, AI can get creative! One classic: “A biography of Albert Einstein’s pet unicorn.”

Which industries are hiring the most prompt engineers today?

Tech, finance, healthcare, education, and marketing are leading the charge. Any sector using generative AI needs skilled prompt engineers for AI communication and workflow optimization.

How can I test if my prompt is clear enough?

Share it with a colleague or run it through the AI—if the output matches your expectations, you’re on the right track. If not, refine for clarity and context.

Is there a ‘wrong’ way to write prompts?

Vague, context-free prompts often lead to poor results. There’s no single “right” way, but best practices—clarity, context, and specificity—are your friends.

How does prompt engineering contribute to AI safety?

Well-crafted prompts can reduce bias, prevent inappropriate outputs, and encourage transparency (like asking for sources). It’s a frontline defense in responsible AI use.

Could prompt engineering ever replace traditional programming?

Not entirely, but it’s changing how we interact with technology. Prompt engineering makes AI more accessible, but deep customization still needs code.

What soft skills help in prompt engineering roles?

Critical thinking, empathy, creativity, and clear communication are vital. Understanding your audience helps you craft better prompts.

Where do I find good prompt engineering communities or events?

Check out Synergetics’ Emerging Technology Community on Meetup, LinkedIn groups, and Discord channels dedicated to AI and prompt engineering fundamentals.

What certifications actually matter?

Certifications from AWS, Microsoft, or Synergetics (especially those covering AI, cloud, and prompt engineering) are well-recognized in the industry.

Any book, website, or YouTube recommendations for further learning?

Start with OpenAI’s documentation, Synergetics webinars, and YouTube channels like Two Minute Papers. Books like “You Look Like a Thing and I Love You” offer a fun intro to AI concepts.

If you had to pick one prompt-writing ‘superpower’, what would it be?

The ability to instantly know what context or example will unlock the best AI response—turning every prompt into pure gold!

Prompt engineering is accessible, creative, and a must-have skill for anyone working with AI. Keep experimenting, stay curious, and remember: your next great prompt is just one question away.

TL;DR: Prompt engineering is where human creativity meets AI power. By learning to write clear, structured prompts and understanding how models like LLMs work, you can unlock smarter, safer, and more reliable AI results—sometimes with surprising (and even humorous) turns along the way. Check out the detailed guide above for practical insights and handy visuals that demystify the art of AI prompting.
