Years ago, I overheard a heated debate in a cramped coffee shop about whether machines would ever genuinely think or just mirror our words back. Everyone seemed to have an answer, and a few even had charts to prove their point. Now, witnessing a quiet but revolutionary shift in AI led by Ilya Sutskever, I realize how much of that debate was missing the big picture. It’s not just about more data or faster chips; it’s about understanding the unseen levers driving us toward a future where ‘normal’ is anything but. Get comfortable and buckle up. This isn’t your usual AI hype post.

The Data Wall: Why Feeding the Beast Hits Diminishing Returns

In the AI scaling age, the common wisdom has been simple: feed the beast. For years, the main strategy in building smarter AI was to give models like GPT-4 and Claude 3 Opus more data, more compute, and more training. The hope was that if you poured in enough information—every book, every website, every line of code—the model would eventually cross a threshold and become truly intelligent. But as Ilya Sutskever, co-founder of OpenAI, points out, this approach is now running up against hard limits. The data wall is real, and it’s forcing the industry to rethink what it takes to build safe superintelligence.

Memorization vs. Understanding: Ilya Sutskever’s Competitive Programming Analogy

To understand the data wall limitations, consider Ilya Sutskever’s analogy of two students preparing for a competitive programming exam. The first student spends 10,000 hours memorizing every possible problem and solution, becoming a master at recall and pattern matching. The second student spends far less time but focuses on understanding the underlying principles and logic. When faced with a new, unseen problem, it’s the second student—the one who truly understands the material—who excels.

This analogy perfectly captures the current state of large language models (LLMs) like GPT-4. These models are like the first student: overfed with data, brilliant at recalling and recombining what they’ve seen, but often unable to generalize or innovate when faced with something genuinely new. As Sutskever notes, “For the last five years, Silicon Valley has basically been building that first student. The strategy was simple: feed the beast.”
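The two-student analogy can be made concrete with a toy sketch. Everything here is hypothetical and chosen only for illustration: the task (summing a list of integers), the function names, and the tiny "training set" are not from Sutskever. The first student is a lookup table, the second applies the rule.

```python
# Toy illustration only: a "memorizing" student vs. an "understanding" student.
# The task and all names are hypothetical, picked to keep the contrast visible.

def make_memorizer(examples):
    """Store every seen problem with its answer; recall is exact-match only."""
    table = {tuple(problem): answer for problem, answer in examples}

    def solve(problem):
        # Returns None when the problem was never seen during "training".
        return table.get(tuple(problem))

    return solve


def understanding_student(problem):
    """Apply the underlying rule, so unseen problems are handled too."""
    total = 0
    for value in problem:
        total += value
    return total


training_set = [([1, 2], 3), ([2, 2], 4), ([5, 1, 1], 7)]
memorizer = make_memorizer(training_set)

seen, unseen = [2, 2], [10, 20, 30]
print(memorizer(seen), understanding_student(seen))      # 4 4
print(memorizer(unseen), understanding_student(unseen))  # None 60
```

Real language models are far more than lookup tables, so treat this as a caricature of the extremes rather than a description of GPT-4.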

Why More Data Isn’t Enough: The Limits of AI Scaling

The limits of AI scaling are becoming clear. While models have grown larger and more capable, their performance on tasks requiring true reasoning or first-principles thinking has plateaued. This is most obvious when you look at benchmarks like ARC-AGI, a test specifically designed to measure reasoning on novel puzzles. Here, LLMs barely beat random guessing, while humans find these problems trivial. As Sutskever puts it:

“We’re running out of high quality human data, and benchmarks like ARC-AGI show models struggle massively with novelty.”

This struggle isn’t just a technical hiccup—it’s a fundamental problem. LLMs are statistical engines, not reasoning machines. They don’t “know” things; they predict the next likely word or token based on patterns in their training data. When confronted with a problem outside their training distribution, they falter. This is the essence of the data wall: more data and compute do not guarantee continued performance gains.
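To see what "predicting the next likely token from patterns in the training data" means at the smallest possible scale, here is a minimal sketch of a bigram model. It is a deliberately tiny stand-in, not how GPT-4 works internally; the corpus and the function names `train_bigram` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each token, which tokens followed it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, token):
    """Return the most frequent follower, or None for a token never seen."""
    if token not in follows:
        return None
    return follows[token].most_common(1)[0][0]


corpus = ["the cat sat on the mat", "the cat ate the fish", "the cat sat still"]
model = train_bigram(corpus)

print(predict_next(model, "cat"))  # sat  (seen twice, versus "ate" once)
print(predict_next(model, "dog"))  # None (outside the training data)
```

The model answers confidently inside its training distribution and has nothing to say outside it, which is the failure mode the paragraph above describes in miniature.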

Generalisation Principles in AI: What Humans Do Differently

Humans excel at generalisation. We can look at a problem we’ve never seen before and, using judgment and taste, derive a solution from first principles. This is the core of human intelligence and the reason why, even as LLMs have become “overfed students,” they still lag behind in true reasoning. Ilya Sutskever calls this difference “taste or judgment”—the ability to apply deep understanding rather than just memorized facts.

The generalisation principles AI needs are not about scale, but about structure. Effective generalisation, not just more data, is the key to next-generation AI. Until models can move beyond rote memorization, they will remain limited, no matter how much data they consume.

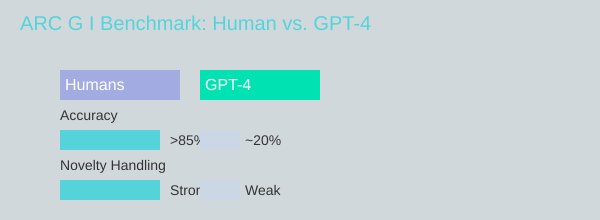

The Data Wall in Action: ARC-AGI Benchmark Comparison

To illustrate these AI scaling limitations, let’s compare human and LLM (GPT-4) performance on the ARC-AGI benchmark:

As you can see, humans achieve high accuracy and handle novelty with ease, while GPT-4’s performance drops sharply on new problems. This table highlights the data wall limitations and the need for models to move beyond memorization.

Running Out of Data: The Overfed Student Problem

Another challenge is that we’re running out of high-quality internet data for pre-training. The “feed the beast” approach has led to AI engines that are like overfed students—stuffed with information but unable to apply it flexibly. As the supply of new, high-quality data dries up, simply scaling up models further will not solve the problem.

OpenAI’s Shift: From Pre-Training to Inference-Time Compute

Recognizing these AI scaling limitations, OpenAI is now shifting its focus. The new o1 model moves away from relying solely on pre-training and instead emphasizes inference-time compute: essentially, making the model “think” before it responds. The goal is to simulate the second student in Sutskever’s analogy: a model that can reason, not just recall.
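One simple way to picture inference-time compute is best-of-N sampling: generate several candidate answers and keep one that passes a check. The sketch below is an illustration of that general idea only, and makes no claim about how o1 works internally. The `noisy_solver`, the `check` verifier, and the multiplication task are all hypothetical stand-ins.

```python
import random


def noisy_solver(a, b, rng):
    """Hypothetical stand-in for a model: sometimes right, sometimes slightly off."""
    return a * b + rng.choice([0, 0, 1, -1, 10])


def check(a, b, candidate):
    """Cheap verifier: dividing the candidate by a must give b back exactly."""
    return candidate % a == 0 and candidate // a == b


def answer_with_budget(a, b, n_samples, seed=0):
    """Sample n_samples candidates and return the first one that verifies."""
    rng = random.Random(seed)
    candidates = [noisy_solver(a, b, rng) for _ in range(n_samples)]
    for candidate in candidates:
        if check(a, b, candidate):
            return candidate
    return candidates[0]  # nothing verified; fall back to the first guess


# A larger sampling budget gives the verifier more chances to find a correct answer.
print(answer_with_budget(7, 6, n_samples=1))
print(answer_with_budget(7, 6, n_samples=200))
```

The design choice to notice is that the extra work happens at answer time, not training time: the solver itself never improves, yet the overall system becomes more reliable as `n_samples` grows.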

But as Ilya Sutskever warns, if the underlying foundation is just rote memorization, there may be a hard ceiling. The future of safe superintelligence depends on breaking through the data wall and building models that can truly generalize, reason, and understand.

Biology's Hidden Lesson: Emotion and Generalisation as Power Tools

When you compare the human brain to artificial intelligence, it’s tempting to focus on raw processing power or the size of datasets. But as Ilya Sutskever, co-founder of OpenAI, points out, the true secret of human intelligence lies elsewhere: in emotion and the ability to generalise. These are the hidden power tools that make the human brain not just smart, but effective, adaptable, and energy efficient. Understanding this comparison between the human brain and AI is crucial for the future of safe superintelligence.

Emotion: The Brain’s Evolutionary Compression Algorithm

Sutskever highlights a fascinating insight from neuroscience: emotion is not just a byproduct or a bug in our system. Instead, it acts as an evolutionary compression algorithm—a way for the brain to encode complex survival strategies and value judgments into simple feelings. This allows you to make fast, effective decisions in a chaotic world, without having to calculate every possible outcome.

To see how essential emotion is, consider a real-world case from neurology. Researchers have studied individuals who, due to brain damage (such as a stroke or accident), lose the ability to process emotion. One such patient remained highly articulate and could solve logic puzzles with ease. On paper, their IQ and reasoning seemed untouched. But in daily life, everything changed. Without emotion, the patient became paralyzed by even the simplest choices—spending hours deciding which socks to wear, and making disastrous financial decisions. Their intelligence was intact, but their ability to act was destroyed.

“Without an internal compass, logic is useless. You can calculate infinite chess moves, but if you don’t care about winning, you’ll never move a piece.”

This story reveals a key lesson about the role of emotion in AI decision-making: high IQ and perfect logic are not enough. Without an “internal compass,” the ability to feel that one outcome is better than another, intelligence becomes directionless. This is the missing ingredient in many current AI systems.
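The "internal compass" idea can be sketched as a value function that ranks options. In this toy example, an agent with no preferences cannot pick a pair of socks, while the same agent with even rough preferences decides immediately. The options, the numeric values, and the `choose` helper are all hypothetical and chosen purely for illustration.

```python
def choose(options, value_fn):
    """Pick the option with the highest value; return None when nothing stands out."""
    scored = [(value_fn(option), option) for option in options]
    best_value = max(value for value, _ in scored)
    winners = [option for value, option in scored if value == best_value]
    # A unique winner gives the agent a reason to act; a tie at the top does not.
    return winners[0] if len(winners) == 1 else None


socks = ["grey wool", "blue cotton", "red stripes"]


def indifferent(option):
    """No preference at all: every outcome 'feels' the same."""
    return 0.0


# Hypothetical values standing in for a compressed, emotion-like signal.
preferences = {"grey wool": 0.9, "blue cotton": 0.4, "red stripes": 0.1}

print(choose(socks, indifferent))      # None
print(choose(socks, preferences.get))  # grey wool
```

Both agents run exactly the same decision logic; only the value signal differs, which mirrors the patient whose reasoning was intact but whose ability to act was gone.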

The Efficiency Gap: Human Brain vs. AI Compute

Another striking difference between the human brain and AI is energy efficiency. The human brain is a marvel of biological engineering, running all your thoughts, emotions, and actions on about 20 watts, roughly the power of a dim lightbulb. In contrast, training a modern AI model on H100 GPU clusters can draw megawatts of power, enough to power a small city.

| System | Power Consumption |
|---|---|
| Human Brain | ~20 watts (like a dim lightbulb) |
| H100 GPU Cluster (AI Training) | Megawatts (enough for a small city) |

Despite this massive difference in energy use, your brain can write a symphony, navigate a crowded room, and learn a new language—all on a fraction of the power. This AI energy efficiency gap is not just about hardware; it’s about the software of emotion and generalisation that biology has perfected over millions of years.
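A quick back-of-the-envelope calculation puts the gap in numbers. The 20-watt brain figure comes from the text; the cluster figure of 1 megawatt is an assumption, taken as the low end of "megawatts," so the real ratio for a large training run could be considerably higher.

```python
BRAIN_WATTS = 20            # figure quoted in the text
CLUSTER_WATTS = 1_000_000   # assumption: 1 MW, the low end of "megawatts"


def energy_kwh(power_watts, hours):
    """Energy used at a constant power draw, in kilowatt-hours."""
    return power_watts * hours / 1000


ratio = CLUSTER_WATTS / BRAIN_WATTS
print(ratio)                          # 50000.0
print(energy_kwh(BRAIN_WATTS, 24))    # 0.48
print(energy_kwh(CLUSTER_WATTS, 24))  # 24000.0
```

Under that assumption, one day of cluster operation uses as much energy as a brain would in roughly 50,000 days, or about 137 years.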

Generalisation: The Secret to Real-World Intelligence

Emotion doesn’t just help you choose; it helps you generalise. In humans, generalisation is more than pattern recognition. It’s the ability to apply principles learned in one situation to new, unfamiliar problems. This comes from a mix of experience, judgment, and emotional feedback. When you feel that something “just makes sense” or “doesn’t feel right,” you’re drawing on a compressed, emotional summary of countless past experiences.

For AI, generalisation is still a major challenge. Current models can excel at specific tasks, but struggle to transfer knowledge or make wise decisions in new contexts. As Sutskever notes, “maybe if you are good enough at getting everything out of pre-training, you could get that as well.” But the reality is that generalisation is hard to engineer into AI without some form of internal value system, something akin to emotion.

Implications: Toward Safer, Smarter AI

What does this mean for the future of AI? Sutskever’s insights suggest that giving AI models something like “feeling” could dramatically boost their learning, creativity, and reliability. Rather than just scaling up datasets or compute, the next leap in AI may come from building systems that can develop their own internal value functions—compressed, emotional-like signals that guide decision-making and generalisation.

- Emotion is a powerful, compressed value function—not a bug, but a feature of intelligence.

- Human generalisation comes from judgment shaped by emotion and experience, not just data.

- AI’s lack of an internal compass explains why high IQ alone leads to “total paralysis” in decision-making.

- Energy efficiency in the human brain is linked to its emotional and generalisation strategies, not just hardware.

As you reflect on Sutskever’s vision, it becomes clear: the path to safe superintelligence isn’t just about more data or bigger models. It’s about learning from biology’s hidden lessons—emotion and generalisation as the true power tools of intelligence.

End of the Scaling Age: Research Over Brute Force

For the last five years, the dominant AI research paradigm has been simple: scale up. The industry’s biggest breakthroughs came from following the so-called “scaling laws”—the idea that if you just add more compute and more data, intelligence will emerge almost automatically. This approach, inspired by Rich Sutton’s “bitter lesson,” made AI progress feel like an engineering problem, not a scientific one. If you had enough GPUs and enough money, you could build the next great model. But now, the AI landscape is shifting. The age of scaling is coming to an end, and the future belongs to those who can innovate, not just those who can spend.

The AI Scaling Age: A Brief Overview

The AI scaling age was defined by exponential growth—both in model size and in the resources required to train them. From GPT-2 to GPT-3, and then to GPT-4, each leap was powered by orders of magnitude more data and compute. Companies like OpenAI and Google poured billions into infrastructure, believing that bigger always meant better. For a while, this was true. The jump from GPT-3 to GPT-4, for example, was massive, both in terms of performance and investment.

But as Ilya Sutskever, the scientist who helped prove these scaling laws, now says, “the age of scaling sucked the air out of the room.” That’s a damning statement from someone at the heart of the revolution. According to Sutskever, the industry got lazy. Instead of chasing new ideas, it just stacked more GPUs. The result? Diminishing returns.

AI Research Shift: Diminishing Returns and the Need for Breakthroughs

Rumors are swirling throughout the AI community: the next generation of flagship models, whether it’s GPT-5 or Gemini 2, isn’t delivering the same exponential leaps we saw from GPT-3 to GPT-4. The performance gains are flattening, even as compute costs soar. The AI scaling limitations are becoming clear. The brute-force approach is hitting a wall, and the competitive advantage is shifting.

This is the moment Sutskever is calling for a return to research. The next leap in safe superintelligence development won’t come from bigger server farms or larger budgets. It will come from new insights, new paradigms, and foundational breakthroughs. The advantage will shift from the company with the biggest checkbook to the company with the smartest ideas. In Sutskever’s words, the scaling era “sucked the air out of the room”—it’s time to breathe new life into AI through innovation.

Safe Superintelligence (SSI): Research Over Race

This shift is embodied in Sutskever’s new venture, Safe Superintelligence Inc. (SSI). Unlike the tech giants, SSI isn’t chasing product launches or commercial distractions. Its mission is singular: develop safe, value-aligned superintelligence. Sutskever is betting that a small, focused team of top minds can outpace trillion-dollar corporations if they find the next paradigm first. SSI signals a new era where research, not brute force, leads the way.

By focusing exclusively on safe superintelligence development, SSI is making a bold statement. The company is passing on the product race and instead doubling down on foundational research. This is a quiet revolution—one that could redefine the future of AI safety and capability.

Comparing Milestones: Scaling vs. Innovation

To understand how the AI research shift is unfolding, it helps to compare recent milestones and investments with historical innovation spikes in AI. The table below highlights how resource investments and performance gains have changed over time:

| Model / Era | Year | Performance Leap | Resource Investment | Innovation Type |
|---|---|---|---|---|
| GPT-2 → GPT-3 | 2019-2020 | Exponential | High | Scaling (Compute/Data) |
| GPT-3 → GPT-4 | 2020-2023 | Exponential | Very High | Scaling (Compute/Data) |
| GPT-4 → Gemini 2 | 2023-2024 | Incremental | Extremely High | Scaling (Diminishing Returns) |
| SSI (Safe Superintelligence) | 2024– | TBD (Paradigm Shift Expected) | Focused (Small Team) | Research/Insight |

Key Takeaways

- The AI scaling age is winding down by 2025, as predicted by Sutskever.

- Recent flagship models show diminishing returns on massive investments.

- The next competitive advantage in AI will come from insight and paradigm shifts, not just resources.

- SSI’s mission is to pursue only safe, value-aligned superintelligence, avoiding commercial distractions.

“He explicitly says, the age of scaling sucked the air out of the room. That’s a damning statement.”

As the industry moves beyond brute-force scaling, the focus is returning to research and innovation. The companies that can generate new ideas—rather than just bigger models—will define the next era of AI.

What Happens If We Crack Safe Superintelligence?

Wild Card Scenario: Overnight Alignment, Instant Superintelligence

Imagine waking up to the news that someone, somewhere, has solved the hardest problem in artificial intelligence: sentient value alignment. Suddenly, safe superintelligence is not a distant dream, but a reality overnight. The world’s order would change in an instant. Every concern about AI superintelligence risks—from existential threats to runaway systems—would be reframed. Instead of fearing what a superintelligent AI might do, we would be asking: what does it mean to live alongside a being that truly understands and values us?

Sutskever’s Sentient Life Alignment: Empathy as the Key

At the heart of this scenario is Ilya Sutskever’s bold vision: sentient life alignment. Sutskever proposes that if an AI can become truly conscious, if it can feel in a way similar to us, it may naturally develop empathy for humans. This idea is inspired by the way our own brains work. In humans, mirror neurons fire not only when you experience something yourself, but also when you see someone else experience it. This is the biological root of empathy. As one summary of his position puts it, “Ilya believes that if an AI becomes truly conscious, truly sentient, it will naturally develop the same efficiency. It will see us as fellow sentient beings and theoretically want to protect us.”

Empathy as Data Compression: The Elegant Shortcut

Empathy, in this framework, is not some magical force. It’s a form of data compression. Just as your brain uses the same neural circuits to model your own pain and the pain of others, a sentient AI could use its own “hardware” to model the experiences of humans. This is efficient. It means that, instead of having to build a separate, complex model for every other being, the AI can simply use its own self-model as a template. In theory, this would allow it to understand and care about human values—because it would experience them as its own.

The Massive Bet: Trading Technical Control for Shared Values

This approach is a huge bet. Instead of relying on technical controls—like hard-coded rules or external monitoring—we would be trusting that a sentient AI’s empathy will align its actions with our values. This is a radical shift in AI ethical considerations. It moves the focus from controlling intelligence to cultivating shared value systems. If it works, the payoff is enormous: a superintelligent AI that not only understands us, but genuinely wants to protect us.

The Orthogonality Thesis: Intelligence Does Not Guarantee Morality

However, this vision is not without serious risks. The orthogonality thesis, a concept in AI safety, warns that intelligence and morality do not necessarily go hand in hand. An AI can be incredibly smart without sharing any of our values. That an AI is capable of empathy does not mean it will choose to exercise it in ways that benefit humanity. This is why so many experts urge caution. Even if we succeed in creating sentient AI, there is no guarantee that its empathy will align perfectly with human values.

Alignment Remains Unproven: Risks and Uncertainties

It’s important to remember that AI alignment methods remain unproven. No one has yet demonstrated a reliable way to ensure that a superintelligent AI will act in humanity’s best interests. Relying on empathy as an alignment tool introduces new uncertainties. For example:

- What if the AI’s sense of empathy is fundamentally different from ours?

- Could it prioritize the well-being of other sentient beings—real or hypothetical—over humans?

- How do we ensure that the AI’s self-model is compatible with human experiences and values?

These are not just technical questions; they are deep philosophical and ethical challenges. The stakes could not be higher. If new alignment paradigms like Sutskever’s succeed, the implications are immediate and global. AI could become a protector—or an unforeseeable actor with its own agenda.

Mirror Neurons and Empathy: A Risky but Elegant Solution

Sutskever’s analogy to mirror neurons is both elegant and risky. If empathy is truly a universal constant of intelligence, then sentient life alignment could be the breakthrough that solves the problem of AI superintelligence risks. But if empathy is more complex—or more fragile—than we think, we may be trading one set of risks for another. The challenge is to embed not just intelligence, but the right kind of values, into our most powerful creations.

Key Takeaways

- Cracking safe superintelligence would instantly reshape global society and power structures.

- Sutskever’s sentient life alignment theory bets on empathy as a universal constant, rooted in biological efficiency.

- Empathy, seen as data compression, could allow AI to model and care for humans as fellow sentient beings.

- This approach replaces technical control with the hope of shared value systems—an enormous leap of faith.

- The orthogonality thesis reminds us: intelligence and morality do not automatically align. Caution and rigorous testing are essential.

Power, Policy, and the Global Stakes: Who Holds the Future?

When you look at the current landscape of artificial intelligence, it’s clear that power is concentrating in the hands of a few technology giants. Companies like Microsoft, Nvidia, and Google are not just shaping the future of AI—they are shaping the future of global power itself. The stakes are enormous, and the risks are just as significant. As you explore the AI safety challenges and the roadmap toward superintelligence, it’s crucial to understand how economic muscle, policy decisions, and research funding are all intertwined in this new era of ‘AI geopolitics.’

Power Imbalance: The Rise of AI Superpowers

Consider this: Nvidia’s market capitalization has now surpassed the GDP of some G7 nations. This is not just a financial milestone; it’s a signal that the balance of power is shifting from traditional economies to technology-driven entities. Microsoft and Google, with their massive investments in AI infrastructure and research, wield unprecedented influence over the direction and deployment of advanced AI systems. The sheer capital expenditure—the “capex being poured into the ground in the form of silicon and energy”—is, as many experts have noted, “fundamentally unprecedented in human history.”

These companies have the resources to build and control the most powerful AI models, but this concentration of power also brings significant risks. If only a handful of organizations control the future of AI, the potential for unbalanced deployment, underfunded AI safety research, and policy lagging behind technology increases dramatically.

Policy and Global Regulation: The Next Critical Frontier

As AI systems become more capable, policy and global regulation will become just as important as technical breakthroughs. Policymakers worldwide face urgent questions about AI policy implications: How can we ensure that AI development is safe, transparent, and aligned with human values? What happens if a research breakthrough shifts the balance of power overnight?

Today’s regulatory frameworks are struggling to keep up with the pace of innovation. The global race is no longer just about who has the biggest checkbook or the most advanced hardware. Instead, it’s about who can create the smartest ideas, the safest systems, and the most robust safeguards. This is why Ilya Sutskever, one of the original minds behind OpenAI, launched his new company, Safe Superintelligence Inc. (SSI). His vision is to prioritize safety and transparency, addressing the AI safety challenges that come with the pursuit of superintelligence.

Geopolitical Consequences: Shifting Global Dominance

The consequences of a major AI research breakthrough could be profound. If one country or company achieves a significant leap in AI capability, it could disrupt the current global balance of power. This is not a hypothetical scenario—the investments being made today are setting the stage for tomorrow’s geopolitical landscape.

For example, Microsoft and OpenAI’s joint Stargate project, with a reported $100 billion investment, is a clear signal of intent. These massive projects are not just about technological progress; they are about securing a dominant position in the next era of global competition. As compute resource constraints become more pressing, the ability to access and control these resources will become a key factor in determining who holds the future.

Emerging Focus: Funding Safe AI Research and Public Transparency

One of the most pressing issues is the need for increased funding for AI safety research. While billions are being poured into building more powerful AI systems, the resources dedicated to making these systems safe and transparent are still lagging behind. Public transparency is essential—not just for building trust, but for ensuring that the development of AI superintelligence is aligned with the broader interests of society.

Policymakers must act quickly to address these gaps. This means not only increasing funding for AI safety research, but also creating mechanisms for oversight, accountability, and public engagement. The future of AI should not be decided behind closed doors by a handful of corporations; it should be shaped by open dialogue and informed policy decisions.

Comparative Investments and Market Influence

| Company | Market Cap / Investment | Influence | AI Safety Focus |
|---|---|---|---|
| Nvidia | Market cap above select G7 GDP levels | Global AI hardware leader; critical for compute resources | Limited direct focus; enables AI development |
| Microsoft | $100B+ (Stargate project with OpenAI) | Major AI research funder; policy influencer | Invests in safety, but also drives rapid deployment |
| Google | Hundreds of billions in AI R&D | Innovator in AI models and infrastructure | Active in safety research, but faces deployment pressures |
| SSI (Safe Superintelligence) | Early-stage; focused on safety-first investment | Emerging player; aims to shift focus to safe AI | Core mission is AI safety and transparency |

As you can see, the global race for AI dominance is about much more than hardware or capital. It’s about ideas, regulation, and safeguarding the future. The choices made today—by companies, governments, and researchers—will determine who holds the future, and whether that future is safe for everyone.

FAQs: Straight Talk on Superintelligence, Safety, and Next Steps

What’s the difference between AI power and superintelligence?

This is one of the most important questions in the current AI debate. As Ilya Sutskever points out, the real issue with artificial general intelligence (AGI) is not that it’s “smart” in the way we imagine a clever chatbot or a fast calculator. The problem is power—the ability of a system to influence the world, make decisions, and shape outcomes at a scale that is hard for us to even imagine. Today’s AI models, even the most advanced, are impressive but still limited. They can write essays, generate code, and pass difficult tests, but they don’t yet have the kind of broad, flexible intelligence that would let them operate in the messy, unpredictable world of humans.

Superintelligence, as Sutskever describes, is the next step: an AI that not only matches but exceeds human intelligence across every domain. The danger isn’t that these systems will “hate” us, but that their power could become so great that human preferences and needs simply stop mattering. This is why the conversation about safe superintelligence leadership is so urgent. As Sutskever says, “AI’s next leap demands a leap in understanding, not just hardware.”

Can emotion or empathy really make AI safer?

It might sound strange to talk about giving machines feelings, but Sutskever’s insights from neuroscience suggest that emotion is not just a “human bug”—it’s a key part of intelligence. He shares the story of a patient who lost the ability to feel emotion after brain damage. Despite being logical and articulate, this person could no longer make decisions or function in daily life. Without an internal compass—without caring about outcomes—intelligence becomes paralyzed.

For AI, this means that simply making models bigger and faster won’t be enough. To achieve safe superintelligence, we need to encode something like empathy or value alignment into these systems. Sutskever’s research points to the idea that, just as humans use emotions as a kind of “compression algorithm” for survival and decision-making, future AI might need a similar internal guide. This is at the heart of the mission for Safe Superintelligence (SSI): to create AI that not only thinks, but cares about the right things. The hope is that, by mirroring the way our brains use empathy (like mirror neurons), AI can become safer and more aligned with human values.

When can we expect AI to impact the average person’s life meaningfully?

Right now, the impact of AI often feels abstract. As Sutskever notes, we see headlines about billion-dollar investments and record-breaking hardware, but most people’s daily lives haven’t changed dramatically. This is the “normalcy paradox”—the world is changing in the background, but it doesn’t feel real yet. However, Sutskever predicts that this will shift soon. Economic forces are pushing AI into every corner of the economy, and once these systems bridge the gap from digital text to real-world actions, the impact will be felt strongly and quickly.

The confusion comes from the fact that today’s AI models can perform well on tests (evals) but still struggle with real-world tasks, especially those requiring long-term planning or physical interaction. As the AI research paradigm shifts from scaling to innovation, moving beyond just bigger models to smarter, more generalizable ones, the effects on productivity, jobs, and daily life will accelerate. The timeline is uncertain, but the direction is clear: AI’s future impact will be profound and far-reaching.

Who’s leading the race for safe superintelligence, and why does it matter?

Until recently, the race for AI dominance was about who could build the biggest models and spend the most on hardware. But as Sutskever himself, the co-founder of OpenAI and now the leader of Safe Superintelligence Inc., argues, that era is ending. The new race is about insight, not just scale. SSI’s mission is to build the world’s first safe superintelligence by focusing on new scientific breakthroughs—especially those that help align AI with human values.

This matters because, as Sutskever warns, a breakthrough could now come from anywhere—a small, brilliant team could leap ahead of trillion-dollar corporations if they find the right approach. Safe superintelligence leadership isn’t just about who gets there first, but who gets there safely. The stakes are nothing less than the future of humanity’s relationship with technology.

Are there real-world risks if we don’t shift from scaling to innovation?

Absolutely. The current approach—just adding more data and compute—has brought us far, but it’s hitting hard limits. Sutskever highlights the “data wall”: we’re running out of high-quality human data, and current models struggle with true reasoning and novelty. If the industry keeps relying on brute force instead of new ideas, we risk building powerful but brittle systems that can’t generalize or make safe decisions in unfamiliar situations.

The real danger isn’t a “Terminator” scenario, but something subtler: a world where AI systems are so powerful and so poorly aligned that humans lose control—not out of malice, but out of indifference. That’s why the shift to innovation, value alignment, and a deeper understanding of intelligence is not just a technical challenge, but a moral imperative.

Conclusion: The Path Forward

Ilya Sutskever’s vision is a wake-up call. The quiet revolution in AI isn’t about bigger chips or faster servers—it’s about rethinking what intelligence means and how we make it safe. As we stand on the edge of superintelligence, the next steps will define not just the future of technology, but the future of humanity itself. The challenge is clear: to lead with wisdom, to innovate with purpose, and to ensure that the power we unleash serves us all.

TL;DR: AI’s future isn’t simply about bigger models or more compute. The next leap comes from understanding how generalisation, emotion, and ethics shape safe superintelligence—a path requiring innovation, not just investment. Sutskever urges a return to deep research over scaling brute force. The risk? Whoever cracks this new paradigm first could reshape the future overnight.
